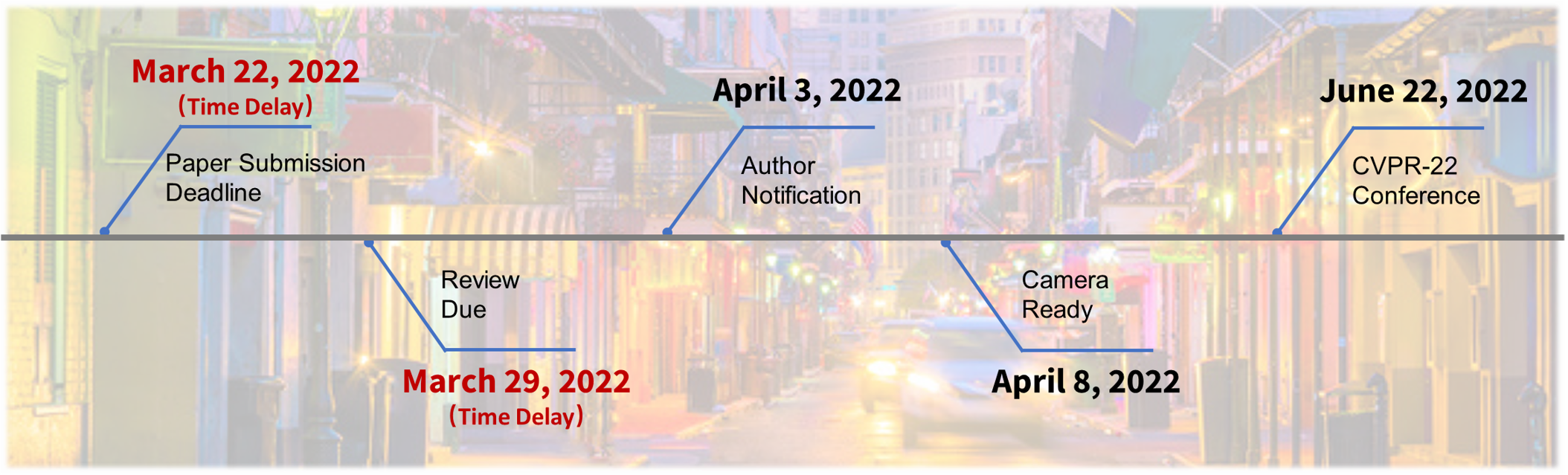

Important Dates

Deep learning has achieved significant success in multiple fields, including computer vision. However, studies in adversarial machine learning indicate that deep learning models are highly vulnerable to adversarial examples. Extensive work has demonstrated that adversarial examples act as a devil for the robustness of deep neural networks, threatening deep learning-based applications in both the digital and physical worlds.

Though harmful, adversarial attacks can also act as an angel for deep learning models. Discovering and harnessing adversarial examples properly can be highly beneficial across several domains, including improving model robustness, diagnosing model blind spots, protecting data privacy, evaluating safety, and further understanding vision systems in practice. Because adversarial learning plays both devil and angel roles, exploring robustness is an art of balancing and embracing both the light and dark sides of adversarial examples.

In this workshop, we aim to bring together researchers from computer vision, machine learning, and security to collaborate through a series of meaningful works, lectures, and discussions. We will focus on the most recent progress and the future directions of both the positive and negative aspects of adversarial machine learning, especially in computer vision. Unlike previous workshops on adversarial machine learning, this workshop explores both the devil and angel roles of adversarial examples for building trustworthy deep learning models.

Overall, this workshop consists of invited talks from experts in this area, research paper submissions, and a large-scale online competition on building robust models. The competition includes two tracks: (1) building digital robust classifiers on ImageNet and (2) building physical robust adversarial detectors in the open set.

The timeline has been released.

ArtofRobust Workshop Schedule

| Event | Start time | End time |
|---|---|---|
| Opening Remarks | 8:50 | 9:00 |
| Invited talk: Yang Liu | 9:00 | 9:30 |
| Invited talk: Quanshi Zhang | 9:30 | 10:00 |
| Invited talk: Baoyuan Wu | 10:00 | 10:30 |
| Invited talk: Aleksander Mądry | 10:30 | 11:00 |
| Invited talk: Bo Li | 11:00 | 11:30 |
| Poster Session | 11:30 | 12:30 |
| Lunch | 12:30 | 13:30 |
| Oral Session | 13:30 | 14:10 |
| Challenge Session | 14:10 | 14:30 |
| Invited talk: Nicholas Carlini | 14:30 | 15:00 |
| Invited talk: Judy Hoffman | 15:00 | 15:30 |
| Invited talk: Alan Yuille | 15:30 | 16:00 |
| Invited talk: Ludwig Schmidt | 16:00 | 16:30 |
| Invited talk: Cihang Xie | 16:30 | 17:00 |

Join our workshop: June 19, 2022 (9:00-17:30), New Orleans time zone (UTC/GMT -5).

| Name | Affiliation |
|---|---|
| Alan Yuille | |
| Yang Liu | |
| Aleksander Mądry | Massachusetts Institute of Technology |
| Nicholas Carlini | |
| Bo Li | |
| Quanshi Zhang | Shanghai Jiaotong University |
| Ludwig Schmidt | University of Washington |
| Cihang Xie | Peking University |
| Judy Hoffman | Georgia Tech |
| Baoyuan Wu | Chinese University of Hong Kong (Shenzhen) |

| Name | Affiliation |
|---|---|
| Aishan | Beihang |
| Florian Tramèr | ETH Zürich and Google Brain |
| Francesco Croce | University of Tübingen |
| Jiakai | Beihang |
| Yingwei | Johns Hopkins University |
| Xinyun | UC Berkeley |
| Cihang | UC Santa Cruz |
| Bo | UIUC |
| Xianglong | Beihang University |
| Xiaochun | Sun Yat-sen University |
| Dawn | UC Berkeley |
| Alan | Johns Hopkins University |
| Philip | Oxford University |
| Dacheng | JD Explore Academy |

Deep learning models are vulnerable to noise, e.g., adversarial attacks, which poses serious challenges to deep learning-based applications in real-world scenarios. A large number of defenses have been proposed to mitigate these threats; however, most of them can be broken, and robustness on large-scale datasets is still far from satisfactory.

To accelerate research on building models that are robust to noise, we organize this challenge track to motivate novel defense algorithms. Participants are encouraged to develop defense methods that improve model robustness against diverse noise on large-scale datasets.

Most defense methods aim to build robust models in the closed set (e.g., under fixed datasets with constrained perturbation types and budgets). However, in real-world scenarios, adversaries can cause more harm and pose greater challenges to deep learning-based applications by generating unrestricted attacks, such as large and visible noise, perturbed images with unseen labels, etc.

To accelerate research on building robust models in the open set, we organize this challenge track. Participants are encouraged to develop a robust detector that can distinguish clean examples from perturbed ones under unseen noise and classes by training on a limited-scale dataset.
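To make the closed-set notion of a "constrained perturbation budget" concrete, the following is a minimal sketch of a sign-gradient (FGSM-style) perturbation bounded in the L-infinity norm, applied to a toy linear classifier. It is not part of the challenge: the weights, input, label, and budget `eps` are hypothetical values chosen only for illustration, and the helper names are assumptions.

```python
# Toy illustration of an L-infinity bounded perturbation (FGSM-style).
# All values are hypothetical and unrelated to the challenge data or rules.

def score(w, b, x):
    """Linear decision score; the predicted label is +1 if the score is positive, else -1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sign(v):
    """Return -1, 0, or +1 according to the sign of v."""
    return (v > 0) - (v < 0)

def linf_perturb(w, x, y, eps):
    """Move each feature by at most eps in the direction that reduces the margin for label y.

    For a linear model the gradient of the score with respect to x is w,
    so the step is -y * eps * sign(w), with y in {-1, +1}.
    """
    return [xi - y * eps * sign(wi) for wi, xi in zip(w, x)]

w = [2.0, -1.0, 0.5]   # hypothetical model weights
b = 0.0                # hypothetical bias
x = [0.2, 0.1, 0.2]    # clean input with true label y = +1
y = 1
eps = 0.15             # perturbation budget (maximum change per feature)

x_adv = linf_perturb(w, x, y, eps)
clean_score = score(w, b, x)       # positive: clean input is classified correctly
adv_score = score(w, b, x_adv)     # negative: perturbed input is misclassified
max_change = max(abs(a - c) for a, c in zip(x_adv, x))

print("clean score:", clean_score)
print("adversarial score:", adv_score)
print("largest per-feature change:", max_change)
```

In this toy setting every feature moves by no more than `eps`, yet the sign of the score flips. Unrestricted attacks of the kind described above are not limited by such a budget.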

Challenge Chair

| Name | Affiliation |
|---|---|
| Siyuan | Chinese Academy of Sciences |
| Zonglei | Beihang |
| Tianlin | Nanyang Technological University |
| Haotong | Beihang |